Tuesday, December 6, 2011

I See Dead Code

All this unused code was a major nuisance for over a year. It caused grep to show too much information, most of it meaningless, and it even caused your IDE to jump to the old unused class file rather than the one you actually wanted.

People were afraid to remove this code for fear of breaking things. After all, it was tied to our main shared library, which could cripple over 200 of our websites at once. But enough was enough, and I decided to remove it. I have a lot of experience removing dead code, but rarely with this much risk or this much code. I had only one day to figure it out, delete it, and test it. Otherwise, it would have to be rolled back for who knows how long, until someone attempted to remove it again.

Before removing code like this, I ask myself whether it's good code that we might want to start using soon, or even right now behind a configuration option. If it's useful code, I don't just delete it; instead, I extract it into classes that could become part of our library.

In my case it really was all just useless code. Some programmer from long ago had been a little too happy to copy and paste code to new files, leaving the old dead files lying around.

Some other code was living in live files but was effectively in a "dead block", because it would only run under a condition that could never occur. I decided to remove this as well, because such code only confuses programmers every time they see it. In fact, I was about to spend time converting it to work with our new platform. What a waste of time that would have been! Fortunately, dead code like this is often easy to identify: you go to refactor it and can't figure out how to trigger that condition from the user interface. This is why we shouldn't rely solely on unit tests.

A coworker suggested that I keep the condition in the code at first, replacing the body of useless code with code to log a message such as "This condition should never happen." This way we could monitor the logs once it was deployed for a while, and really make sure the code wasn't being used by anything.
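Here is a minimal sketch of that "tombstone" idea, written in JavaScript purely for illustration (the original codebase isn't shown). The function `calculateTotal`, the `FRF` currency check standing in for the dead condition, and the injectable `log` parameter are all hypothetical:

```javascript
// Hypothetical example: the branch we believe is dead no longer does any work.
// Its body was replaced with a "tombstone" log line so production logs can show
// whether the condition ever really happens before the branch is deleted.
function calculateTotal(order, log = console.warn) {
  if (order.currency === "FRF") {
    // Tombstone: French franc orders should no longer exist.
    log("This condition should never happen: FRF order " + order.id);
  }
  // The live code path, unchanged.
  return order.items.reduce((sum, item) => sum + item.price * item.quantity, 0);
}
```

Once the logs have stayed quiet for long enough, the whole `if` block can be deleted with much more confidence.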

The easiest dead code to spot is commented-out code. Go ahead and remove it any time you see it. It will only sit there forever, no one will ever uncomment it, and if someone really does need it, that's what source control is for.

For those times that it's hard to tell if code is dead or not, the main tool I used to figure it out is grep. More specifically I use ack-grep. The key is to trust your grepping abilities and be extremely thorough. If you can prove that certain code isn't being referenced via other files, that's a huge step toward having the confidence to remove it.
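grep or ack does this job better in practice, but the "prove it isn't referenced" check boils down to a whole-word search across every file except the one that defines the code. A JavaScript sketch of that idea, assuming a hypothetical in-memory map of file paths to contents so it stays self-contained (a real check would read files from disk, and no text search can catch dynamic references such as class names built from strings):

```javascript
// Escape characters that have special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Return "path:line" for every line that mentions `identifier` as a whole word,
// skipping the file that defines it.
function findReferences(files, identifier, definingFile) {
  const pattern = new RegExp("\\b" + escapeRegExp(identifier) + "\\b");
  const hits = [];
  for (const [path, contents] of Object.entries(files)) {
    if (path === definingFile) continue;
    contents.split("\n").forEach((line, i) => {
      if (pattern.test(line)) hits.push(`${path}:${i + 1}`);
    });
  }
  return hits;
}
```

The word boundaries matter: searching for `OldCart` should not count a mention of `OldCartHelper` as a reference.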

Of course, it's not always that easy. Sometimes the old code is still referenced, but that's okay; updating those references to use the proper files instead is certainly worth the effort.

Once you have removed the code and all is working in your development sandbox, it's time to deploy it. This really isn't much different from writing new code or refactoring existing live code: any time you commit code, it could cause other things to break. This is why we do regression testing.

I highly recommend waiting to push this kind of change until there's no other important code that needs to be deployed with it. If the "new" code turns out not to work, your code removal will be the first thing blamed, and you will likely have to roll it back and risk never getting to try again, even if it wasn't the problem.

So go ahead and remove that dead code the next time you see it. You'll thank yourself later and so will your coworkers.

Sunday, November 20, 2011

Math and Algorithm Programming with Project Euler

From the official website for Project Euler:

Project Euler is a series of challenging mathematical/computer programming problems that will require more than just mathematical insights to solve. Although mathematics will help you arrive at elegant and efficient methods, the use of a computer and programming skills will be required to solve most problems.

Here's how it works: you register for free on the site, and it gives you problems such as "Calculate the sum of all the primes below two million." Your task is to implement an algorithm in the programming language of your choice that computes the answer. Once you have the answer, you paste it into the website and it tells you whether you got it right. If you answered correctly, it lets you view other programmers' source code for how they did it, and even submit your own if you want to.
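That example problem is a natural fit for the Sieve of Eratosthenes. A minimal JavaScript sketch (the function name is just illustrative), checked here only against small inputs such as 2 + 3 + 5 + 7 = 17, so running it on two million is left to you:

```javascript
// Sum all primes strictly below `limit` using the Sieve of Eratosthenes.
function sumOfPrimesBelow(limit) {
  if (limit <= 2) return 0;
  // isComposite[n] becomes 1 once n is known to have a smaller prime factor.
  const isComposite = new Uint8Array(limit);
  let sum = 0;
  for (let n = 2; n < limit; n++) {
    if (isComposite[n]) continue;
    sum += n; // n survived the sieve, so it is prime
    // Start crossing out at n*n: smaller multiples were already crossed out.
    for (let m = n * n; m < limit; m += n) isComposite[m] = 1;
  }
  return sum;
}

console.log(sumOfPrimesBelow(10)); // 17
```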

I recommend doing some of the problems as code katas. A code kata is a way of practicing low-level programming problems by typing them out over and over until they become second nature, just like doing martial arts katas helps martial artists. I still remember some of the katas from martial arts that I haven't done in over 10 years. Repetition truly is the mother of all skill.

Another thing that doing Euler problems will help you with is learning how to optimize algorithms. The speed of the machine your program runs on largely affects how fast your programs run, but you often don't notice how much until you run an algorithm on some large numbers. Fortunately, many Euler problems will really push you and your machine.

You optimize the algorithms by benchmarking the program and refactoring it to run faster and faster. Sometimes you'll need to use a different math library to speed things up. For example, bcmath is a lot faster than the regular math functions in PHP for me.

How do you benchmark? The easiest way is to use the time command, which is available for Unix-like operating systems such as Linux and OS X:

$ time ./my-program

You should also try profiling your solutions. Ruby comes with a built-in code profiler if you give it the -rprofile argument, but it's rather slow. I prefer to install ruby-prof, so I can profile with:

$ ruby-prof ./my-program.rb

Profiling is great because it tells you exactly which parts of your code take the longest to run, so you can focus on optimizing just the bottlenecks.
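`time` and a profiler measure the whole program, but you can also time competing implementations from inside the program. Here's a JavaScript sketch for Node.js comparing a brute-force loop with a closed-form arithmetic-series version of Project Euler's first problem (sum of the multiples of 3 or 5 below a limit). `process.hrtime.bigint()` is Node's nanosecond timer; the function names are illustrative, and a single run like this is only a rough measurement:

```javascript
// Brute force: test every number below the limit.
function sumMultiplesLoop(limit) {
  let sum = 0;
  for (let n = 1; n < limit; n++) {
    if (n % 3 === 0 || n % 5 === 0) sum += n;
  }
  return sum;
}

// Closed form: the multiples of k below limit sum to k * m * (m + 1) / 2,
// where m = floor((limit - 1) / k). Multiples of 15 are counted twice, so subtract them once.
function sumMultiplesFormula(limit) {
  const sumOf = (k) => {
    const m = Math.floor((limit - 1) / k);
    return (k * m * (m + 1)) / 2;
  };
  return sumOf(3) + sumOf(5) - sumOf(15);
}

// Run fn(arg) once and report elapsed wall-clock time in milliseconds.
function timeIt(label, fn, arg) {
  const start = process.hrtime.bigint();
  const result = fn(arg);
  const ms = Number(process.hrtime.bigint() - start) / 1e6;
  console.log(`${label}: ${result} in ${ms.toFixed(3)} ms`);
  return result;
}

timeIt("loop", sumMultiplesLoop, 10000000);
timeIt("formula", sumMultiplesFormula, 10000000);
```

Both versions return the same answer; the point of timing them is to see how much an algorithmic change buys you compared with micro-tuning the loop.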

I highly recommend solving the same problems in different languages. This will not only help teach you other languages; it will also show you how much faster or slower one language is compared to others, and how expressive one language is compared to another. Sometimes I use the exact same algorithm in C as in PHP, and the syntax is almost identical, but the C version runs light years faster, of course.

It's also worth running your solutions under different versions of the same language. For example, PHP 5.3 solves my Euler problems a lot faster than PHP 5.2, Ruby 1.9 solves them a lot faster than 1.8, and so on.

I solved a lot of Euler problems between 2007 and 2010. When I recently looked back at some of them, one thing I'm really glad I did was write comments to myself in each source file noting: 1) how long it took me to solve the problem, 2) how fast it ran on whatever machine and language version I was using at the time, and 3) which language I solved it in first.

One last thing: make sure you go to projecteuler.NET and not the .com, which is a really annoying spam website.

Thursday, October 27, 2011

Learning CoffeeScript With Unit Tests

CoffeeScript is a language that transcompiles to JavaScript and has syntax that looks more like Ruby or Python. In other words, it lets you write JavaScript without writing JavaScript, and with a lot less code. The generated JavaScript even runs as fast as, if not faster than, JavaScript you had written by hand.

I don't mind writing pure JavaScript, but I really like Python and Ruby, so I figured I'd give CoffeeScript a try since the syntax is familiar. CoffeeScript can even make jQuery easier to write than it already is.

My coworker Danny turned me on to using Koans to learn Ruby, and when he mentioned there's a CoffeeScript version, I immediately jumped on it.

Koans can aid in learning a new programming language. They're a Behavior Driven Development approach that turns learning into a kind of game where you fill in the blanks to make tests pass.

A CoffeeScript Koan consists of CoffeeScript code with Jasmine unit test files. The tests look like:

# Sometimes we will ask you to fill in the values
it 'should have filled in values', ->
  expect(FILL_ME_IN).toEqual(1 + 1)

You then compile the file (in this case AboutExpects.coffee) using the command-line coffee tool:

$ coffee -c AboutExpects.coffee

This in turn creates the corresponding JavaScript file (in this case AboutExpects.js). If we were just writing CoffeeScript code, we could then run the .js file in a web browser as usual, or even execute it without a browser if you have Node.js installed (we'll install it in a minute):

$ node yourFile.js

However, since we're doing Koans, we'll load the file using the bundled KoansRunner.html file, which runs our compiled .js file with the Jasmine Behavior Driven Development framework. This lets us know whether our tests passed or failed.

Let's start by installing CoffeeScript and its command-line compiler. Note that modern Ruby on Rails installs should already have CoffeeScript. I've only tested the following steps on Ubuntu Linux, but they should work on most Unix-like systems. You're also free to look for prebuilt packages for your system if you want to make it a little easier on yourself.

The coffee command-line tool depends on Node.js, so we'll need to install that first. Currently, the best way to install Node is to compile it yourself, so download the tarball or clone the GitHub repository, then run the usual:

$ ./configure && make && sudo make install

Now that Node is installed, we'll install the Node Package Manager (NPM):

$ cd /tmp
$ curl -O http://npmjs.org/install.sh
$ sudo sh install.sh

Now you can install the coffee command with:

$ sudo npm install -g coffee-script

Once installed, typing coffee should show an interactive prompt like:

coffee>

Type Ctrl-D to exit.

You're now ready to install the CoffeeScript Koans from the github repository. Assuming you're working from ~/src/:

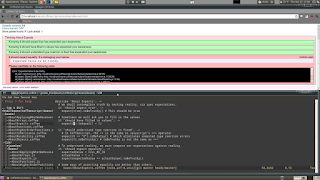

$ mkdir ~/src/koans $ cd ~/src/koans $ git clone https://github.com/sleepyfox/coffeescript-koans.git $ cd ~/src/koans/coffeescript-koans/In the above screenshot image in this article, you can see I've split my Linux screen in half, with the top half a Web Browser running KoansRunner.html. The bottom half is AboutExpects.coffee open in Vim.

Note that the answers to the first two Koans are already filled out in my example. They're so overly simplistic that just by understanding those two answers you'll have learned as much as you otherwise would have, so don't feel cheated.

So now it will be your job to open koans/AboutExpects.coffee and fill in a test method with what you assume is the correct answer. Then compile it with:

$ coffee -c koans/AboutExpects.coffee

Next, move the generated JavaScript file to the lib/koans directory and reload KoansRunner.html to see the new test results. Once you complete all the Koans in AboutExpects.coffee, you'll move on to the next Koans file, AboutArrays.coffee, and so on.

There are a couple of tips for making compiling and reloading easier. One tip is that at the root of the Koans directory, you can run a command called "cake", which was installed earlier during the coffee install process. You can verify this with:

$ file $(which cake)
/usr/local/bin/cake: symbolic link to `../lib/node_modules/coffee-script/bin/cake'
$ file -L $(which cake)
/usr/local/bin/cake: a node script text executable

Running:

$ cake build

will compile all of the tests in the koans/ directory and automatically copy them to the lib/koans directory. This allows you to add a Vim keyboard mapping to your ~/.vimrc file, such as:

nmap <Leader>c :!cake build<CR>

which lets you recompile the Koans by simply typing \c in normal mode. The Leader key on my system is a backslash (Vim's default); YMMV. Just make sure to run the shortcut from the root of the Koans directory so cake can find the Cakefile.

If you have Ruby and RubyGems installed, there's an even easier way to do this, using a "watch" command. The coffee command-line tool comes with an option called -w, which watches files for changes and recompiles them automatically. The Koans build on this idea by including a Ruby watchr script for each platform: koans-linux.watchr, koans-win.watchr and koans-mac.watchr. To use them, you need to install the watchr gem:

$ gem install watchr

Now, if you were on a Linux machine, say, you would run this in a terminal:

$ watchr koans-linux.watchr

Now you can edit and save your CoffeeScript Koans and just hit refresh in your browser; watchr takes care of the cake build step automatically!

Another nice thing to be able to do is check our CoffeeScript code for syntax errors before we try to run it. This is known as "linting". The coffee command-line tool comes with an option called -l that lets you lint your files, but only if you have jslint installed first. So install it with:

$ npm install jslint

At this point, you're breezing through the Koans and have learned enough CoffeeScript to start writing your JavaScript with it. Then you open a compiled .js file and notice that the JavaScript looks a little verbose. As a simple example, say you had written a Hello World function in a file called hello.coffee:

sayHi = (name) ->
  return "Hello " + name

console.log sayHi("Ryan")

Then you compiled it, opened the .js file, and noticed it looked like:

(function() {
  var sayHi;
  sayHi = function(name) {
    return "Hello " + name;
  };
  console.log(sayHi("Ryan"));
}).call(this);

As you might suspect, that outer function wrapper is there by default to keep the global namespace clean. You can either remove it manually or compile your CoffeeScript files with "-b, --bare", which produces simply:

var sayHi;
sayHi = function(name) {
  return "Hello " + name;
};
console.log(sayHi("Ryan"));
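To see what the wrapper actually buys you, here's a small Node.js sketch (not part of the original tutorial) that runs both compiled versions in a fresh global context using Node's built-in `vm` module, then checks whether `sayHi` leaked onto that context's global object. A stub `console` is passed in so the scripts can still call `console.log`; the helper name `leaksSayHi` is just illustrative:

```javascript
const vm = require("vm");

const wrapped = `(function() {
  var sayHi;
  sayHi = function(name) { return "Hello " + name; };
  console.log(sayHi("Ryan"));
}).call(this);`;

const bare = `var sayHi;
sayHi = function(name) { return "Hello " + name; };
console.log(sayHi("Ryan"));`;

// Run `code` as a top-level script in a brand-new global context and report
// whether `sayHi` ended up as a property of that context's global object.
function leaksSayHi(code) {
  const printed = [];
  const sandbox = { console: { log: (msg) => printed.push(msg) } };
  vm.runInNewContext(code, sandbox);
  return { leaked: typeof sandbox.sayHi === "function", printed };
}

console.log(leaksSayHi(wrapped)); // wrapper keeps sayHi local
console.log(leaksSayHi(bare));    // bare output defines a global sayHi
```

Both versions print the same greeting; only the bare one leaves `sayHi` behind as a global, which is why --bare is best reserved for code you'll wrap or bundle yourself.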

There is other verbosity, such as always declaring variables with the var keyword. However, there's no good reason to take those things out, since it's actually great to have CoffeeScript do the tedious things for us that we often skip doing.

That's all for now. Please visit the main CoffeeScript and CoffeeScript Koans sites for more information.

Sunday, October 23, 2011

Approval Tests in PHP

Today, after hearing a talk by Llewellyn Falco, I decided to play with the Approval Tests library. There seem to be plenty of articles on using it with Java, .NET and Ruby, but none on PHP, so I figured I'd write about it here.

Approval Tests are about simplifying the testing of complex objects, large strings, and the like, where traditional assert methods might fall short. The library works with your existing testing framework, be it PHPUnit, RSpec, JUnit, NUnit, or what have you. If you've never heard of Approval Tests before, please read about them first and come back when you understand the basic concepts. This article focuses on how to use the Approval Tests library in PHP.
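As a quick refresher on the basic concept, here is a deliberately tiny JavaScript sketch of the approve-a-string workflow. It is not the Approval Tests library's API: the function name `approveString`, its parameters, and the exact file naming are assumptions chosen to mirror the received/approved files the PHP library produces:

```javascript
const fs = require("fs");
const path = require("path");

// Compare `received` with the previously approved output stored on disk.
// On a match, delete any stale .received.txt and pass; otherwise write the new
// output to .received.txt and throw, leaving a human to inspect and approve it.
function approveString(testName, received, dir) {
  const approvedFile = path.join(dir, `${testName}.approved.txt`);
  const receivedFile = path.join(dir, `${testName}.received.txt`);
  const approved = fs.existsSync(approvedFile)
    ? fs.readFileSync(approvedFile, "utf8")
    : null;
  if (approved === received) {
    if (fs.existsSync(receivedFile)) fs.unlinkSync(receivedFile);
    return true;
  }
  fs.writeFileSync(receivedFile, received);
  throw new Error(`Not approved: compare ${receivedFile} with ${approvedFile}`);
}
```

The first call for a given test writes the .received.txt file and fails; once you rename that file to .approved.txt, the same call passes until the output changes.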

To install the library, I downloaded the tarball from the SourceForge download page and unpacked it into my project's directory. It complained about me not having Zend_PDF, so I installed the Zend Framework "minimal" edition, which required adding the Zend Framework's "library" directory to my PHP include_path. You'll also need PHPUnit installed with PHP_CodeCoverage.

Once it was installed, getting the Approval Tests library to work with PHP on my Linux box was tricky because, as of this writing at least, the PHP library seems to have no documentation and is rather incomplete. So I rolled up my sleeves and dug into the source code.

The automatic diff tool, and the message telling you how to move the "received" file to the "approved" file, didn't work. The reason is that approvals/Approvals.php only had cases for 'html' and 'pdf' and otherwise defaulted to the PHPUnitReporter, which throws a runtime exception and thus short-circuits the functionality in the approve() method.

To remedy this, I added a case for "txt" to the getReporter() method in approvals/Approvals.php. That got it to actually try to call the diff tool, but it seems to assume you're using Mac OS X: approvals/reporters/OpenReceivedFileReporter.php does a system() call to the "open" command. On OS X, open opens a file as if you had double-clicked its icon, but on my Linux box it runs openvt, which in this case causes an error like "Couldn't get a file descriptor referring to the console." So I edited approvals/reporters/OpenReceivedFileReporter.php and changed:

system(escapeshellcmd('open') . ' ' .
    escapeshellarg($receivedFilename));

to:

// Show a unified diff of the approved and received files in the terminal.
system('diff -u ' . escapeshellarg($approvedFilename) . ' ' .
    escapeshellarg($receivedFilename));

That got everything working for me. I'm going to email the author about this and maybe submit a patch to get it working with vimdiff. For now, "diff -u" was enough, as I was following the Do The Simplest Thing That Could Possibly Work rule.

Now onto the PHP source code.

<?php

require_once 'approvals/Approvals.php';

class Receipt {
    private $items;

    public function __construct() {
        $this->items = array();
    }

    public function addItem($quantity, $name, $price) {
        $this->items[] = array('name' => $name, 'quantity' => $quantity, 'price' => $price);
    }

    public function __toString() {
        return $this->implode_assoc('=>', '|', $this->items);
    }

    // Join "key{glue}value" pairs with $delimiter, flattening array values with commas.
    private function implode_assoc($glue, $delimiter, $pieces) {
        $temp = array();
        foreach ($pieces as $k => $v) {
            if (is_array($v)) {
                $v = implode(',', $v);
            }
            $temp[] = "{$k}{$glue}{$v}";
        }
        return implode($delimiter, $temp);
    }
}

class ReceiptTest extends PHPUnit_Framework_TestCase {
    /**
     * @test
     **/
    public function Should_create_a_new_receipt() {
        $r = new Receipt();
        $r->addItem(1, 'Candy Bar', 0.50);
        $r->addItem(2, 'Soda', 0.50);
        Approvals::approveString($r);
    }
}

Running the test the first time creates the file:

ReceiptTest.Should_create_a_new_receipt.received.txt

in the current directory and fails the test. It's your job to view the generated txt file and decide if it looks right. If it does, rename it to:

ReceiptTest.Should_create_a_new_receipt.approved.txt

Now when you rerun the test, it will diff the current test's output against the approved.txt file. If they're equivalent, the test passes. If they're not, it fails, and it's up to you to diff the received and approved files to find the differences and figure out how to get your test to pass again.

For example, the first time you run the test, it will generate the "received.txt" with the string:

0=>Candy Bar,1,0.5|1=>Soda,2,0.5

If you rename the file to its approved.txt equivalent, change the quantity of Sodas to 3, and rerun the test, it will fail: it creates another received.txt to diff against approved.txt, and this time the received file will contain:

0=>Candy Bar,1,0.5|1=>Soda,3,0.5
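If you want to double-check those expected strings without a PHP setup, here's a rough JavaScript port of the Receipt class's string conversion, written for illustration only. Each item's values are joined with commas in name, quantity, price order, and items are joined as `index=>values` with `|`; like PHP here, JavaScript prints 0.50 as 0.5:

```javascript
class Receipt {
  constructor() {
    this.items = [];
  }

  addItem(quantity, name, price) {
    // Same key order as the PHP version: name, quantity, price.
    this.items.push({ name, quantity, price });
  }

  toString() {
    // index=>name,quantity,price for each item, separated by "|".
    return this.items
      .map((item, i) => `${i}=>${Object.values(item).join(",")}`)
      .join("|");
  }
}

const r = new Receipt();
r.addItem(1, "Candy Bar", 0.5);
r.addItem(2, "Soda", 0.5);
console.log(String(r)); // 0=>Candy Bar,1,0.5|1=>Soda,2,0.5
```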

I hope you approve this article.

Thursday, September 29, 2011

The Bowling Game Kata in PHP

If you run the tests like:

$ phpunit --testdox BowlingTest.php

you should see the tests all pass like:

Bowling
 [x] Should roll gutter game
 [x] Should roll all ones
 [x] Should roll one spare
 [x] Should roll one strike
 [x] Should roll perfect game

<?php

class Game
{
    private $rolls = array();

    public function roll($pins) {
        $this->rolls[] = $pins;
    }

    public function score() {
        $score = 0;
        $firstInFrame = 0; // index of the first roll in the current frame
        for ($frame = 0; $frame < 10; $frame++) {
            if ($this->isStrike($firstInFrame)) {
                $score += 10 + $this->nextTwoBallsForStrike($firstInFrame);
                $firstInFrame++;
            } else if ($this->isSpare($firstInFrame)) {
                $score += 10 + $this->nextBallForSpare($firstInFrame);
                $firstInFrame += 2;
            } else {
                $score += $this->twoBallsInFrame($firstInFrame);
                $firstInFrame += 2;
            }
        }
        return $score;
    }

    private function nextTwoBallsForStrike($firstInFrame) {
        return $this->rolls[$firstInFrame + 1] + $this->rolls[$firstInFrame + 2];
    }

    private function nextBallForSpare($firstInFrame) {
        return $this->rolls[$firstInFrame + 2];
    }

    private function twoBallsInFrame($firstInFrame) {
        return $this->rolls[$firstInFrame] + $this->rolls[$firstInFrame + 1];
    }

    private function isStrike($firstInFrame) {
        return $this->rolls[$firstInFrame] == 10;
    }

    private function isSpare($firstInFrame) {
        return $this->rolls[$firstInFrame] + $this->rolls[$firstInFrame + 1] == 10;
    }
}

class BowlingTest extends PHPUnit_Framework_TestCase {
    private $g;

    public function setUp() {
        $this->g = new Game;
    }

    /**
     * @test
     */
    public function Should_roll_gutter_game() {
        $this->rollMany(20, 0);
        $this->assertEquals(0, $this->g->score());
    }

    /**
     * @test
     */
    public function Should_roll_all_ones() {
        $this->rollMany(20, 1);
        $this->assertEquals(20, $this->g->score());
    }

    /**
     * @test
     */
    public function Should_roll_one_spare() {
        $this->rollSpare();
        $this->g->roll(3);
        $this->rollMany(17, 0);
        $this->assertEquals(16, $this->g->score());
    }

    /**
     * @test
     */
    public function Should_roll_one_strike() {
        $this->rollStrike();
        $this->g->roll(3);
        $this->g->roll(4);
        $this->rollMany(16, 0);
        $this->assertEquals(24, $this->g->score());
    }

    /**
     * @test
     */
    public function Should_roll_perfect_game() {
        $this->rollMany(12, 10);
        $this->assertEquals(300, $this->g->score());
    }

    // Test helpers: they only feed rolls to the Game under test.
    private function rollMany($n, $pins) {
        for ($i = 0; $i < $n; $i++) {
            $this->g->roll($pins);
        }
    }

    private function rollSpare() {
        $this->g->roll(5);
        $this->g->roll(5);
    }

    private function rollStrike() {
        $this->g->roll(10);
    }
}
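Katas like this are also good practice for a second language. Here's a rough JavaScript translation of the same score() logic, written for illustration as a plain function over the array of rolls rather than a class: a strike scores 10 plus the next two rolls and uses one roll, a spare scores 10 plus the next roll and uses two, and an open frame scores its two rolls:

```javascript
// Score a complete game given every roll in order (pins knocked down).
function score(rolls) {
  let total = 0;
  let i = 0; // index of the first roll in the current frame
  for (let frame = 0; frame < 10; frame++) {
    if (rolls[i] === 10) {
      // Strike: 10 plus the next two rolls; the frame used one roll.
      total += 10 + rolls[i + 1] + rolls[i + 2];
      i += 1;
    } else if (rolls[i] + rolls[i + 1] === 10) {
      // Spare: 10 plus the next roll; the frame used two rolls.
      total += 10 + rolls[i + 2];
      i += 2;
    } else {
      // Open frame: just the pins from its two rolls.
      total += rolls[i] + rolls[i + 1];
      i += 2;
    }
  }
  return total;
}
```

Like the PHP version, it assumes a complete, valid game; it does no input checking.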

Sunday, September 18, 2011

Creating Code Snippets for any Programming Language

The bottom line is that snipMate will save you a ton of time and is far more powerful than simple code-completion. Some people spend a lot of money on individual IDEs that only work with one or two languages. In this article I will show examples of how Vim can create powerful snippets in C, PHP, Python, Ruby, JavaScript, HTML, and more.

The perfect first example of snipMate's capabilities is generating a for loop by typing nothing more than:

for[Tab]

Which expands to:

for (i = 0; i < count; i++) {
    // code
}

That's if you were editing a C file, anyway. The snipMate plugin is context sensitive, based on the file type you're editing. For example, if you were editing a PHP file instead, typing for[Tab] would have produced a PHP for loop:

for ($i = 0; $i < count; $i++) {
    // code...
}

What's more, after expanding to a full for loop, snipMate places your cursor on the first likely edit point. In this case, it places your cursor on the word "count" and puts Vim in SELECT mode, so you can start typing to replace it with something else, such as "$max". Whether or not you change it, hitting [Tab] jumps to the next edit point, which in this case goes back to the first "$i" in the loop. From there, if you type x, it replaces all the $i's in the loop with $x's. Typing [Tab] again jumps your cursor to the "++" in "$x++", in case you want to change it to something else like "+=2". Typing [Tab] one last time takes you down to the "// code" line. You get the picture. To jump backwards, use [Shift+Tab], as you might expect.

You can even enable multiple filetypes at the same time in Vim by chaining them with dots. For example:

:set ft=php.html

Will let you use both the PHP and HTML snippets on the file you're editing.

How does this work? Behind the scenes there are snippet files. Your ~/.vim/snippets/ directory contains files such as c.snippets (for C code), php.snippets (for PHP code), and so on. The plugin knows what filetype you're editing and loads the corresponding snippet files based on the prefix of the file name. So if you're editing a PHP file, it loads the files in the snippets directory whose names start with "php".

This means you can easily create your own custom additions. For example, to create more PHP snippets, you could either add them to the existing php.snippets file, or better yet, create your own new file called "php-mine.snippets". In it, you input snippets that don't come with the default snippets file. For example, I really wanted to be able to just type th[Tab] to create $this->. So in php-mine.snippets, I made the snippet:

snippet th
	$this->${1}

It's important that you indent snippet bodies with actual tab characters (\t) in the snippets file. In Vim, typing Ctrl+v Tab in insert mode inserts a real tab.

The "${1}" in the snippet tells snipMate where you want the cursor to go after the snippet expands. In the above example, I'm telling it to land directly after the "->". To add more places for the cursor to jump to as the user keeps pressing [Tab], put a placeholder at each spot and give it the next number: "${2}", "${3}", etc. To highlight some text when your cursor jumps there, add a colon (:) after the number followed by the text to select: ${2:foo} will jump your cursor to the string "foo" and highlight it.
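To make the ${N} and ${N:default} notation concrete, here's a toy JavaScript sketch of what an expander has to do: replace each placeholder with its default text and record where each numbered tab stop lands, in jump order. This is not how snipMate is implemented; the function `expandSnippet` and its return shape are hypothetical, and real snipMate features such as mirrored placeholders (the repeated $i in the for loop) are ignored:

```javascript
// Expand ${N} and ${N:default} placeholders in a snippet body.
// Returns the expanded text plus the tab stops sorted by N, each with the
// offset where the cursor lands and the text that would be pre-selected.
function expandSnippet(body) {
  const stops = [];
  let text = "";
  let last = 0;
  const pattern = /\$\{(\d+)(?::([^}]*))?\}/g;
  for (const match of body.matchAll(pattern)) {
    text += body.slice(last, match.index);
    const selected = match[2] || "";
    stops.push({ number: Number(match[1]), offset: text.length, selected });
    text += selected;
    last = match.index + match[0].length;
  }
  text += body.slice(last);
  stops.sort((a, b) => a.number - b.number);
  return { text, stops };
}

console.log(expandSnippet("substr(${1:string $string}, ${2:int $start} ${3:[, int $length]})"));
```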

Note that if you create multiple snippets with the same name, Vim will prompt you with a selection list to choose from.

Another PHP snippet you might create is:

snippet substr
	substr(${1:string $string}, ${2:int $start} ${3:[, int $length]})

Which expands to:

substr(string $string, int $start [, int $length])

This is useful if you often forget the parameters certain functions take.

I also like to create another snippets file called "php-unit.snippets" which contains my PHPUnit framework snippets. In here, I have snippets like:

snippet test
	/**
	 * @test
	 **/
	public function ${1:}() {
		${2:}
	}${3:}

Which, when you type test[tab], expands to:

/**
 * @test
 **/
public function () {

}

A more involved example is my getMockBuilder() method snippet:

snippet getmockbuilder
	${8:}$this->getMockBuilder('${1:string $OriginalClassName}')
		->setMethods(${2:array $methods})
		->setConstructorArgs(${3:array $args})
		->setMockClassName(${4:string $name})
		${5:->disableOriginalConstructor()}
		${6:->disableOriginalClone()}
		${7:->disableAutoload()}
		->getMock();

Expands to, when you type getmockbuilder[Tab]:

$this->getMockBuilder('string $OriginalClassName')
    ->setMethods(array $methods)
    ->setConstructorArgs(array $args)
    ->setMockClassName(string $name)
    ->disableOriginalConstructor()
    ->disableOriginalClone()
    ->disableAutoload()
    ->getMock();

Tip: To easily search through all the existing snippets of a .snippets file, type this search query in Vim:

/snippet \zs.*

Some useful Python snippets:

import = imp[tab]
function template = def[tab]
for loop = for[tab]

(Also see Pydiction, the Python code-completion plugin for Vim that I wrote.)

Some other useful PHP snippets include:

php = <?php ?>
echo = ec[tab]
fun = public function FunctionName() ...
foreach = foreach[tab]
if = if (/* condition */) { ...
ife = if/else
else = else { ...
elseif = elseif () { ...
switch/case = switch[tab]
case = case 'value': ...
t = $retVal = (condition) ? a : b;
while = wh[tab]
do/while = do[tab]
Super Globals = $_[tab]
docblock = /*[tab]
inc1 = include_once
req = require
req1 = require_once
$_ = List of $_GET[''], $_POST[''], etc.
globals = $GLOBALS['variable'] = something;
def = define('')
def? = defined('')
array = $arrayName = array('' => );
/* = docblock: /** * **/
doc_h = file header docblock
doc_c = class docblock
doc_cp = class post docblock
doc_d = constant docblock
doc_fp = function docblock
doc_v = class variable docblock
doc_vp = class variable post docblock
doc_i = interface docblock

Some Bash snippets:

#![tab] expands to:

#!/bin/bash

if[tab] expands to:

if [[ condition ]]; then
	#statements
fi

For JavaScript:

get[Tab] = getElementsByTagName('')

gett[Tab] = getElementById('')

timeout = setTimeout(function() {}, 10);

...

For Ruby:

ea = each { |e| }

array = Array.new(10) { |i| }

gre = grep(/pattern/) { |match| }

patfh = File.join(File.dirname(__FILE__), *%w[rel path here])

...

In a previous article I mentioned using Zen Coding for super fast HTML creation. Well, you can also create HTML with snipMate. This is useful when you need to do something that Zen Coding cannot do. For instance, snipMate has all the HTML Doctypes:

docts = (HTML 4.01 strict)

doct = (HTML 4.01 transitional)

docx = (XHTML Doctype 1.1)

docxt = (XHTML Doctype 1.0 transitional)

docxs = (XHTML Doctype 1.0 strict)

docxf = (XHTML Doctype 1.0 Frameset)

doct5 = (HTML 5)

Other HTML snippets include:

head[tab]

title[tab]

script[tab]

scriptsrc[tab]

r[tab]

base[tab]

meta[tab]

style[tab]

link[tab]

body[tab]

table[tab]

div[tab]

form[tab]

input[tab]

textarea[tab]

mailto[tab]

h1[tab]

label[tab]

select[tab] and opt[tab] and optt[tab]

movie[tab]

nbs[tab]

That's all for now. If you want to share snippets you made please link to them in the comments section below.

Saturday, September 17, 2011

Zen Coding Your HTML, XML and CSS

The Zen Coding plugin expands abbreviated code you write. For example, it can expand:

html>head>title

to:

<html>
    <head>
        <title></title>
    </head>
</html>

Installing the Zen Coding plugin for Vim is as easy as downloading zencoding.vim and installing it to your $HOME/vimfiles/ftplugin/html directory. Now when you're editing an HTML file (:set ft=html), you can type an abbreviation like:

html:5

Then, while in either insert or normal mode, type:

Ctrl+y,

That's Ctrl+y (^y) followed by a comma. That should expand to the HTML 5 template:

<!DOCTYPE HTML>
<html lang="en">
<head>
    <title></title>
    <meta charset="UTF-8">
</head>
<body>
</body>
</html>

There's a lot more you can do with it, such as repeating tags. For example, say you want an unordered list containing four list items, each with an incrementing class name. Typing:

ul>li.item$*4 Ctrl+y,

Should expand to:

<ul>
    <li class="item1">_</li>
    <li class="item2"></li>
    <li class="item3"></li>
    <li class="item4"></li>
</ul>

The "*" multiplies the element before with the number after it. And the "$" gets replaced by an incrementing number. Pretty cool, huh?
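The * and $ rules are simple enough to sketch. This toy JavaScript function handles only a single tag.class$*N term (no nesting, ids, or > and + operators) and is not how the Zen Coding plugin works internally; the name `expandRepeated` is hypothetical:

```javascript
// Expand one term such as "li.item$*4": repeat the element N times and
// replace "$" in the class name with the 1-based repetition number.
function expandRepeated(term) {
  const match = /^([a-z][a-z0-9]*)(?:\.([\w$-]+))?(?:\*(\d+))?$/i.exec(term);
  if (!match) throw new Error(`Unsupported abbreviation: ${term}`);
  const [, tag, className, times] = match;
  const count = times ? Number(times) : 1;
  const lines = [];
  for (let i = 1; i <= count; i++) {
    const cls = className ? ` class="${className.replace(/\$/g, String(i))}"` : "";
    lines.push(`<${tag}${cls}></${tag}>`);
  }
  return lines;
}

console.log(expandRepeated("li.item$*4").join("\n"));
```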

Notice the underscore inside the first LI tag. This is where your cursor ends up after the expansion. Zen Coding also lets you jump between edit points: typing "Ctrl+y n" jumps your cursor to the next LI element, and typing "Ctrl+y N" jumps to the previous one.

Say you want to add a tag but not nest it. For example:

<p></p>

<a href=""></a>

Then just add a "+" (plus sign) between the tags to make them siblings:

p+a Ctrl+y,

You can delete any tag you're in by typing: Ctrl+y k

To create any tag with an opening and closing tag, just type the name of the tag:

foo Ctrl+y,

becomes:

<foo></foo>

To create:

<script type="text/javascript"></script>

Just type:

script Ctrl+y,

To make a DIV with an id of "foo": div#foo Ctrl+y,

To create nested DIVs, you could, for example, type:

div#foo$*2>div.bar Ctrl+y,

Which would expand to:

<div id="foo1">
    <div class="bar">_</div>
</div>
<div id="foo2">
    <div class="bar"></div>
</div>

To create tags that close themselves, such as a BR tag:

br Ctrl+y,

Becomes:

<br />

To comment out a block of HTML, just move your cursor to the start of the block of HTML and type: Ctrl+y/

If you visually select the block and type Ctrl+y, it will prompt for a tag to wrap the block inside of. You can even enter expressions here to wrap text into HTML. For example, if you had the text:

line1

line2

line3

You could highlight it, type Ctrl+y, (Ctrl+y followed by a comma), and enter ul>li* at the prompt to convert it to:

<ul>

<li>line1</li>

<li>line2</li>

<li>line3</li>

</ul>

You can also apply "filters" at the end of your expressions. For example, adding "|e" will escape your HTML output so that < becomes &lt;, > becomes &gt;, and so on. So typing a|e Ctrl+y, expands to:

&lt;a href=""&gt;&lt;/a&gt;
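If you want the same kind of escaping outside Vim, Python's standard html.escape() does roughly what the |e filter does. This is only an analogy: the assumption here is that the filter leaves double quotes alone, hence quote=False.

```python
import html


def escape_filter(markup: str) -> str:
    """Roughly what Zen Coding's |e filter does: show markup as literal text."""
    # quote=False leaves double quotes alone; only &, < and > become entities.
    return html.escape(markup, quote=False)


print(escape_filter('<a href=""></a>'))  # &lt;a href=""&gt;&lt;/a&gt;
```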

Another filter called "|c" adds HTML comments to important tags, such as tags with ID and/or classes. There's even a HAML filter.

I'll leave you with one more neat thing you can do with Zen Coding. You can add text to your expressions that will be placed in the corresponding position. For example:

p>{Click }+a{here} Ctrl+y,

Expands to:

<p>

Click <a href="">here</a>

</p>

Enjoy!

Friday, September 16, 2011

Refactoring with Vim Macros

There are some refactoring commands that you may wish to repeat in the Vim editor but don't want to make a permanent mapping for because they're only needed temporarily for the current file. And a complicated regular expression would take you too long to get right - especially with Vim's non-standard regex engine - and you may not feel it safe to run even if you did manage to figure it out.

Fortunately, Vim also supports macros. Knowing just the Vim commands that you already know, plus a couple of commands to record and play them, you can create powerful macros with very little effort.

To record a macro in Vim, type qq to begin recording, followed by the series of commands to record, then q to stop recording. You then type @q to execute the recorded macro. Let's start with a very simple example. Say you have a file with a bunch of lines:

line1

line2

line3

line4

...

and you want to change the first letter of each line to a capital letter. You might normally do this manually by going to the beginning of each line and typing ~ (tilde in Vim toggles the case of the character under the cursor). Then you might type j repeatedly to move down to each line and type . to repeat the command. I've seen people do stuff like this in files with 50+ lines, and they just sit there like a robot typing the same thing over and over. Well, it doesn't have to be that way. Instead, you could just go to line1 and type: qq0~j<ESC>q

That runs the commands on the current line while recording them, and leaves you on the next line so you can easily replay the macro. Note that the 0 (zero) I added to the macro jumps to the beginning of the line, so we only ever capitalize the first letter. Now you're ready to repeat the macro on the rest of the lines by typing:

3@q

The '3' is for the number of times to repeat the macro.
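For comparison, this is what the macro does to each line, written as a small Python function. It's an illustration only: like ~, it toggles case, so a line that already starts with a capital would be lowercased.

```python
def toggle_first_char(line: str) -> str:
    """Mimic Vim's 0~ on one line: swap the case of only the first character."""
    return line[:1].swapcase() + line[1:]


lines = ["line1", "line2", "line3", "line4"]
print([toggle_first_char(line) for line in lines])
# ['Line1', 'Line2', 'Line3', 'Line4']
```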

As another example, say you need to edit a PHP file that has a bunch of ugly, yet similar, lines like:

if (@$foo) someFunction($foo);

and you want to refactor these lines to be like:

if (isset($foo) && !empty($foo)) {

someFunction($foo);

}

To do this, you could type the following (don't worry if you don't understand it yet, I'll break it down in a minute):

0f@xyeiisset(<ESC>f)i) && !empty(<ESC>pa)<ESC>la {<ENTER><ESC>o}<ESC>

To record it, all you need to do is add qq to the beginning and q to the end, like so:

qq0f@xyeiisset(<ESC>f)i) && !empty(<ESC>pa)<ESC>la {<ENTER><ESC>o}<ESC>q

Now you can put your cursor anywhere on a line containing the if statement you want to refactor and type @q, and it will run the macro. To repeat the macro, go to another line and type @@. Okay, let's break down the command. It may need to be tweaked depending on your Vim setup, but on my setup it works like this:

qq begins recording

0 jumps to the beginning of the line

f@ jumps to the @ sign.

x deletes the @ sign

ye yanks from the cursor to the end of the word (copies $foo)

i puts you in INSERT mode

isset( inserts the text "isset("

<ESC> escapes you back to normal mode

f) jumps to the next closing parenthesis (the one after $foo)

i) && !empty( inserts the text ") && !empty("

<ESC> escapes you back to normal mode

p pastes the text "$foo"

a) inserts the closing parenthesis

<ESC> escapes you back to normal mode

la moves your cursor onto the final ")" and enters insert mode just past it

{ inserts the opening brace

<ENTER> inserts a newline, pushing "someFunction($foo);" to the next line

<ESC> escapes you back to normal mode

o opens a new line below the current one and puts you in insert mode

} inserts a closing brace (which should auto-indent depending on your setup)

<ESC> escapes you back to normal mode

q ends recording

This may seem intimidating at first glance and make you think it's more trouble than it's worth, but the beauty of Vim is how quickly you can type commands. You just need to try it a few times to get the hang of it, trust me.
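For comparison, here is roughly the same refactoring done as a one-off Python regular-expression script, the kind of regex the macro lets you avoid writing. It's a hypothetical sketch that assumes each if statement fits on one line and uses a four-space indent for the body.

```python
import re

# Matches one-line statements like: if (@$foo) someFunction($foo);
# Assumption: the whole if statement sits on a single line, as in the example.
SUPPRESSED_IF = re.compile(r"^(?P<indent>\s*)if \(@(?P<var>\$\w+)\) (?P<body>.+;)$")


def refactor_line(line: str) -> str:
    """Rewrite a suppressed-if line into the isset/!empty form; leave others alone."""
    m = SUPPRESSED_IF.match(line)
    if m is None:
        return line
    indent, var, body = m.group("indent", "var", "body")
    return (
        f"{indent}if (isset({var}) && !empty({var})) {{\n"
        f"{indent}    {body}\n"
        f"{indent}}}"
    )


print(refactor_line("if (@$foo) someFunction($foo);"))
```

Even in this simple case you have to think about escaping $, parentheses and braces, which is exactly the fiddliness a recorded macro sidesteps.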

In the above examples I used qq to begin recording. This is just a convention for single macros. You can actually store multiple macros at the same time by storing them in different registers. For example, qa begins recording to register a, which you can then run with @a. You could then create another macro with qb, run it with @b, and so on. You can even run a macro on a specific range of lines, say 20 to 30, by doing:

:20,30norm! @q

Similarly, you could visually select just the lines you want to run the macro on and then type the following (when you press : in visual mode, Vim fills in '<,'> for you):

:norm @q

If you remember this simple strategy of recording each series of commands you plan to repeat, then you're golden. If you're going to have to type the commands either way, then recording them is very little effort compared to manually typing the commands over and over.

Friday, September 9, 2011

Readability Counts

It helps me to remember that coding is explaining. And not just to the computer, but to yourself and to others.

The nice thing is that you don't have to write perfect code (which is impossible anyway). It just needs to be understandable to you and others. If you can write code simply so that everyone can understand it, then you will come across as a much better programmer than you otherwise would.

The cleaner your code becomes, the more side benefits you will notice, such as less fatigue when working with it and fewer bugs!

If you run the Python interactive prompt and enter:

>>> import this

It will print "The Zen of Python" by Tim Peters:

Beautiful is better than ugly.

Explicit is better than implicit.

Simple is better than complex.

Complex is better than complicated.

Flat is better than nested.

Sparse is better than dense.

Readability counts.

Special cases aren't special enough to break the rules.

Although practicality beats purity.

Errors should never pass silently.

Unless explicitly silenced.

In the face of ambiguity, refuse the temptation to guess.

There should be one-- and preferably only one --obvious way to do it.

Although that way may not be obvious at first unless you're Dutch.

Now is better than never.

Although never is often better than *right* now.

If the implementation is hard to explain, it's a bad idea.

If the implementation is easy to explain, it may be a good idea.

Namespaces are one honking great idea -- let's do more of those!

It's pretty sad that we still need to remind each other that readability counts (as was pointed out in the May 2010 "Zen of Python" episode of the "From Python Import Podcast" podcast).

Sometimes the problem is that the code's author has never read a book on how to write readable code, even though there are plenty of good ones out there.

A book written in the 1970s by Brian Kernighan and P. J. Plauger, a little before I was even born, entitled "The Elements of Programming Style", spoke of how the best documentation for a computer program is a clean structure. It also helps if the code is well formatted, with good mnemonic identifiers and labels and a smattering of enlightening comments.

And again in 1999, Brian Kernighan coauthored another great book with Rob Pike, "The Practice of Programming", which outlines its main principles as: "simplicity," which keeps programs short and manageable; "clarity," which makes sure they are easy to understand, for people as well as machines; "generality," which means they work well in a broad range of situations and adapt well as new situations arise; and "automation," which lets the machine do the work for us.

A few other seminal works, such as "The Pragmatic Programmer: From Journeyman to Master", "Code Complete: A Practical Handbook of Software Construction", and of course Bob Martin's "Clean Code: A Handbook of Agile Software Craftsmanship", all go into great detail on how to write readable and maintainable code.

From my experience, a general rule is if you're looking at a piece of code and all you can say about it is "Boy, this sure is convoluted", then it's probably not clean code. If you can understand the code right away, it may or may not be well-written; perhaps you're just used to reading poorly written code. However, if it seems like the code is so nice that it actually gives you a good feeling -- or at least doesn't give you a headache from squinting -- then it's clearly readable code.

A few of the main guidelines for writing readable code are:

- Your functions / classes should be doing only one thing each.

- Your variable names should not require comments.

- Use adequate whitespace and consistent indentation.
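Here's a small, hypothetical Python illustration of those guidelines (the function names and data are invented for the example). Both versions return the same result, but the second splits the work into single-purpose functions whose names make comments unnecessary.

```python
# Harder to read: a cryptic name, index-based access, and several jobs in one loop.
def proc(d):
    r = []
    for x in d:
        if x[1] >= 18 and "@" in x[2]:
            r.append(x[2].strip().lower())
    return r


# Easier to read: each function does one thing, and the names explain themselves.
def is_adult(age):
    return age >= 18


def looks_like_email(address):
    return "@" in address


def normalize_email(address):
    return address.strip().lower()


def adult_email_addresses(users):
    """Return normalized addresses of adult users, given (name, age, email) tuples."""
    return [
        normalize_email(email)
        for name, age, email in users
        if is_adult(age) and looks_like_email(email)
    ]


users = [("Ann", 30, " Ann@Example.com "), ("Bob", 12, "bob@example.com")]
print(adult_email_addresses(users))  # ['ann@example.com']
```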

There are many more guidelines, some of which differ between an everyday code base and API design; for those I refer you to the aforementioned books.

References:

59 Seconds: Change your life in under a minute

Science Daily

From Python Import Podcast

Saturday, September 3, 2011

Better Than Grep

I've been using ack instead of grep for about four years now and I'm still loving it. It has saved me countless hours and a lot of energy searching through large code bases and miscellaneous files and directories. Everyone who has ever used grep knows how useful and necessary a tool it is, so anything that might be better than it is certainly worth a try.

Description from ack's home page: ack is a tool like grep, designed for programmers with large trees of heterogeneous source code. ack is written purely in Perl, and takes advantage of the power of Perl's regular expressions.

So what exactly makes ack better than grep? The main thing for me is that it requires much less typing to do common powerful operations. It's designed to replace 99% of the uses of grep. It can help you avoid complex find/grep/xargs messes and the like.

On the surface, both commands simply print lines that match a pattern:

$ grep rkulla /etc/passwd

rkulla:x:1000:1000:Ryan Kulla,,,:/home/rkulla:/bin/bash

$ ack rkulla /etc/passwd

rkulla:x:1000:1000:Ryan Kulla,,,:/home/rkulla:/bin/bash

To get grep to print colored output you need to add --color, though you could make a shell alias that does it implicitly. Ack shows colors automatically.

Where ack really shines is that it searches recursively through directories by default, while ignoring .svn, .git, and other VCS directories. So instead of typing something silly like:

$ grep foo $(find . -type f | grep -v '\.svn')

You could simply type this instead:

$ ack foo

ack automatically ignores most of the crap you don't want to search, such as binary files.
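To make that default behavior concrete, here is a rough Python sketch of an ack-style recursive search. It is not how ack is implemented: it matches a plain substring rather than a Perl regex, uses a small hypothetical set of VCS directory names, and treats any file that isn't valid UTF-8 as binary.

```python
import os

# Hypothetical, hand-picked ignore list; ack's real list is longer.
VCS_DIRS = {".svn", ".git", ".hg", "CVS"}


def search(pattern, root="."):
    """Yield (path, line_number, line) for every line containing pattern."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune VCS directories in place so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if d not in VCS_DIRS]
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, encoding="utf-8") as f:
                    lines = f.read().splitlines()
            except (UnicodeDecodeError, OSError):
                continue  # crude binary-file skip: not valid UTF-8 text
            for number, line in enumerate(lines, start=1):
                if pattern in line:
                    yield path, number, line


for path, number, line in search("foo"):
    print(f"{path}:{number}:{line}")
```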

Also, instead of typing shell globs like *.txt to search just text files, ack uses command line arguments such as --text. If you were to grep for 'foo' in *.txt, it would only consider files that ended with exactly .txt, whereas ack would consider .txt, .TXT, .text and even text files that don't have file extensions, such as README. Conversely, you could type --notext to search everything except text files. To see a list of the different file type arguments and which file types they affect, type:

$ ack --help=types

--[no]html .htm .html .shtml .xhtml

--[no]php .php .phpt .php3 .php4 .php5 .phtml

--[no]ruby .rb .rhtml .rjs .rxml .erb .rake

--[no]shell .sh .bash .csh .tcsh .ksh .zsh

--[no]yaml .yaml .yml

...

This is obviously incredibly convenient for any user. It's especially convenient for someone like a system administrator who may be tasked with searching through all the Ruby files on a system, yet might not know every file extension Ruby uses. With ack, all he has to remember is --ruby.
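Conceptually, a type flag is just a named set of extensions. Here is a minimal Python sketch of that idea, using an excerpt of the table above (not the full list). It lowercases extensions, in line with the .txt/.TXT behavior described earlier, but only looks at extensions, so unlike ack it would not pick up extensionless files.

```python
from pathlib import Path

# Excerpt of the `ack --help=types` table; not the complete list.
FILE_TYPES = {
    "php": {".php", ".phpt", ".php3", ".php4", ".php5", ".phtml"},
    "ruby": {".rb", ".rhtml", ".rjs", ".rxml", ".erb", ".rake"},
    "yaml": {".yaml", ".yml"},
}


def matches_type(path, type_name):
    """True if the file's extension (case-insensitive) belongs to type_name."""
    return Path(path).suffix.lower() in FILE_TYPES[type_name]


def filter_by_type(paths, type_name, negate=False):
    # negate=True mirrors the --no<type> form: keep everything except that type.
    return [p for p in paths if matches_type(p, type_name) != negate]


print(filter_by_type(["app.rb", "Rakefile", "db.rake", "index.php"], "ruby"))
# ['app.rb', 'db.rake']
```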

Use -a to search all files.

Ack will also give you more readable output than grep. It groups matches under each file name and puts each matching line on its own line with its line number, so you can more easily discern the file name, line number, and pattern matches.

Because ack is written in Perl, it can take advantage of some of the language's features, such as Perl's regular expressions and literal quoting. You can use -Q or --literal to have ack match the pattern literally, similar to \Q...\E (quotemeta) in Perl. From the ack-grep man page (on Debian and Ubuntu, ack is installed as ack-grep):

If you're searching for something with a regular expression metacharacter, most often a period in a filename or IP address, add the -Q to avoid false positives without all the backslashing.
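The false-positive problem is easy to see in Python, where re.escape() plays roughly the role of -Q. The IP address and log lines below are made up for the example.

```python
import re

ip = "10.0.0.1"
lines = ["10.0.0.1 - - GET /index.html", "10.0.011 - - GET /other.html"]

# As a regex, each "." matches any character, so the second line matches too.
as_regex = [line for line in lines if re.search(ip, line)]
# re.escape() quotes the metacharacters, which is roughly what -Q does.
as_literal = [line for line in lines if re.search(re.escape(ip), line)]

print(len(as_regex), len(as_literal))  # 2 1
```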

Under "Use ack-grep to watch log files": Here's one I used the other day to find trouble spots for a website visitor. The user had a problem loading troublesome.gif, so I took the access log and scanned it with ack-grep twice.

$ ack-grep -Q aa.bb.cc.dd /path/to/access.log | ack-grep -Q -B5 troublesome.gif

The first ack-grep finds only the lines in the Apache log for the given IP. The second finds the match on my troublesome GIF, and shows the previous five lines from the log in each case.
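Here's a rough Python equivalent of that two-stage pipeline, as a sketch: plain substring matching stands in for -Q, and the helper names are hypothetical. Unlike grep and ack, it doesn't merge overlapping context groups.

```python
from collections import deque


def grep_with_before(lines, needle, before=5):
    """Yield each matching line preceded by up to `before` earlier lines (like -B5)."""
    recent = deque(maxlen=before)  # sliding window of the previous lines
    for line in lines:
        if needle in line:
            yield list(recent) + [line]
        recent.append(line)


def trouble_spots(log_lines, ip, asset, before=5):
    # Stage 1: keep only this visitor's requests (like ack-grep -Q aa.bb.cc.dd).
    visitor = [line for line in log_lines if ip in line]
    # Stage 2: find the asset plus the requests leading up to it (like -Q -B5).
    return list(grep_with_before(visitor, asset, before))
```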

You can do a lot of other cool stuff with ack that I won't go into because if I haven't convinced you to use ack by now, I never will. Consult the documentation for ack-grep if you like what you see.

Saturday, August 20, 2011

Knowing What to Unit Test

The main thing that seems obvious to test is the public API of what your class does. If your class extracts email addresses from strings and files then it might seem obvious to have tests like Should_extract_email_addresses_from_string() and Should_extract_email_addresses_from_file().

That is all well and good, but if you follow the BDD principle "test behavior, not methods," then you won't merely be testing at a 1:1 ratio between your test methods and your MUTs (Methods Under Test). You often need more than one test per MUT because you need to test different things about it. For example, just because you have a method called blockFollower() and the corresponding test method Should_block_follower(), it doesn't mean you shouldn't have additional test methods like Should_not_block_all_followers(), Should_not_block_who_you_are_following(), and so on.
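Here's a sketch of what several behavior tests for one method might look like in Python's unittest (the post's own examples are PHP). The Account class, and its choice to raise ValueError when you try to block someone you follow, are hypothetical.

```python
import unittest


class Account:
    """Hypothetical account model, just enough to exercise block_follower()."""

    def __init__(self, followers=(), following=()):
        self.followers = set(followers)
        self.following = set(following)
        self.blocked = set()

    def block_follower(self, name):
        if name in self.following:
            raise ValueError("refusing to block someone you follow")
        self.followers.discard(name)
        self.blocked.add(name)


class BlockFollowerBehavior(unittest.TestCase):
    def setUp(self):
        self.account = Account(followers={"ann", "bob"}, following={"cy"})

    def test_should_block_follower(self):
        self.account.block_follower("ann")
        self.assertIn("ann", self.account.blocked)
        self.assertNotIn("ann", self.account.followers)

    def test_should_not_block_all_followers(self):
        self.account.block_follower("ann")
        self.assertIn("bob", self.account.followers)

    def test_should_not_block_who_you_are_following(self):
        with self.assertRaises(ValueError):
            self.account.block_follower("cy")
```

Three tests, one method: each test name reads as a statement of behavior. Run it with python -m unittest.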

Another way to figure out what to test is to think not only of the positive tests but also of the negative tests. If your method should throw an exception when you give it certain data (such as no data), then have a test like Should_throw_exception_if_input_is_null().
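Continuing the email-extraction example, a positive and a negative test might look like this in Python's unittest. The extract_email_addresses() function and its deliberately simple pattern are hypothetical, and TypeError stands in for whatever exception your own code throws on null input.

```python
import re
import unittest

# Deliberately simple, hypothetical pattern; real email matching is messier.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def extract_email_addresses(text):
    if text is None:
        raise TypeError("text must be a string, not None")
    return EMAIL.findall(text)


class ExtractEmailAddresses(unittest.TestCase):
    def test_should_extract_email_addresses_from_string(self):
        self.assertEqual(
            extract_email_addresses("mail a@b.com or c@d.org"),
            ["a@b.com", "c@d.org"],
        )

    def test_should_throw_exception_if_input_is_null(self):
        with self.assertRaises(TypeError):
            extract_email_addresses(None)
```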

Take this a step further and think of all the behavior your code should exhibit. Suppose your process should continue to run even if there's an error. You can make a test for that too: Should_continue_running_even_if_there_is_an_error().

Whenever you're refactoring, you should also be unit testing. If the legacy code you're refactoring doesn't have unit tests, be sure to avoid regression by adding test coverage around it before you try to change it.

There's no limit to what you can test. Even if your class has a method that sends mail you can still put tests on it. One way to do this is to have a test method that checks if the method was simply called. You don't have to test that it returned something "in real life" if it's not possible. If your method is called sendMail() then you could have a test called Should_send_mail() and at least test that the sendMail() method is successfully called with whatever input you give it; you're testing that the arguments it takes work properly, etc.
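In Python, unittest.mock makes this "was it called, and with what?" style of test straightforward. In this hypothetical sketch the mailer is injected, so the test can swap in a Mock and no real mail is ever sent.

```python
import unittest
from unittest import mock


class Notifier:
    """Hypothetical class that sends a welcome mail through an injected mailer."""

    def __init__(self, mailer):
        self.mailer = mailer

    def welcome(self, address):
        self.mailer.send_mail(to=address, subject="Welcome!")


class NotifierTest(unittest.TestCase):
    def test_should_send_mail(self):
        mailer = mock.Mock()
        Notifier(mailer).welcome("ann@example.com")
        # Nothing goes out over the network; we only check the call and its arguments.
        mailer.send_mail.assert_called_once_with(to="ann@example.com", subject="Welcome!")
```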

Look again at your MUTs whenever you feel you have all the tests you need. Are they really designed to only do one thing each? When the answer is no, break them down into smaller pieces and put tests around those pieces, or at least around the groups of pieces if they all constitute a single behavior.

Perhaps you don't even have methods because no one bothered to put the code in a class or even procedural functions to begin with. I see this all the time, especially with PHP code. If that's the case it's time to start extracting as many classes and methods as you can and put those under test. It helps to think about the structure of the code. Look for code smells like globals and lack of Dependency Injection. Aim for functional decomposition.

Languages that aren't strictly typed, such as PHP, also present you with some new unit test ideas. Type hinting in PHP only goes as far as arrays and objects, but with a little creativity you can create tests that enforce that a variable has to be a string or an integer, all without the need to write extra code in your application to enforce it:

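/** @test */
public function should_only_allow_strings_for_area_codes() {
    // PHPUnit only runs methods named test* or annotated with @test.
    // Fails if getAreaCode() returns a non-string, such as an int.
    $this->assertInternalType('string', $this->obj->getAreaCode());
}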

Think destruction. The more you can get into the evil mindset of purposely trying to break code, the more test ideas you'll come up with. For instance, think of boundary conditions where things could go awry. Try throwing the date "February 29" at your calendar function (accounting for leap years, though) or a non-ASCII string at your form validator. Create test helper functions that generate random dates, strings, and so on, and throw them at your MUTs. Aim to write tests that fail even though you think they should work.
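Here's a sketch of such boundary tests in Python. The days_in_february() function is hypothetical, and random_years() is the kind of random-data helper described above, seeded so that a failing run can be reproduced.

```python
import calendar
import datetime
import random
import unittest


def days_in_february(year):
    """Hypothetical calendar function under test."""
    return 29 if calendar.isleap(year) else 28


def random_years(count, seed=42):
    """Test helper: reproducible random years to throw at the code under test."""
    rng = random.Random(seed)
    return [rng.randint(1, 9999) for _ in range(count)]


class FebruaryBoundaries(unittest.TestCase):
    def test_should_accept_february_29_in_leap_years(self):
        self.assertEqual(days_in_february(2000), 29)  # divisible by 400
        self.assertEqual(days_in_february(2012), 29)

    def test_should_reject_february_29_in_century_non_leap_years(self):
        self.assertEqual(days_in_february(1900), 28)  # divisible by 100, not 400

    def test_should_agree_with_datetime_for_random_years(self):
        for year in random_years(200):
            try:
                datetime.date(year, 2, 29)
                expected = 29
            except ValueError:
                expected = 28
            self.assertEqual(days_in_february(year), expected)
```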

The book Working Effectively with Legacy Code suggests that it's okay to test static methods, as long as they don't have state or nested static methods. A lot of ideas of things to test are lost whenever we hear that something should never be done. As in life, there are almost always exceptions.

Another thing you can test is your ideas and prototypes. TDD is great because, since you're writing your tests first, you can actually design an entire class (or application, for that matter) without so much as an internet connection. Hell, you could even design the whole thing on paper, just by thinking of all the test method names. If at 5:50 p.m. on Friday your project manager tells you that first thing Monday morning they want you to create a feature for the admin interface to allow the deletion of users, then by 6:00 p.m. you could have already written the skeleton tests: Should_delete_user(), Should_not_delete_admin(), and so on.

Remember to always watch your tests fail before writing the code to make them pass; that's how you make sure each test can actually fail and that it displays the right message when it does.

Whenever you get a bug report, start by writing a unit test that exposes the bug before you fix it.

The key isn't to just write more unit tests for the sake of writing more unit tests. The key is quality over quantity. This will also help you avoid the all-or-nothing thinking that prevents some people from ever writing any unit tests. The more meaningful unit tests you have, the more confident you'll feel working with, and using, the entire system.

What about knowing which things to mock? Try not to mock third-party libraries and other things you don't have control over, because doing so creates fragile expectations.

As far as database unit testing goes, avoid DBUnit-style integration tests. It's fine to mock database access in order to satisfy interface type hints and the like; I often stub PDO when working in PHP, for example. I also like to use SQLite, because in-memory databases allow unit tests to stay unit tests (and quick ones), and it's just plain nice to be able to delete the database between tests without hurting anything.
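The same idea in Python, as a rough analogue of PHP's PDO with the sqlite::memory: DSN. Each test gets a brand-new in-memory database, so throwing it away between tests costs nothing. The UserRepository class is hypothetical.

```python
import sqlite3
import unittest


class UserRepository:
    """Hypothetical repository that receives its connection (dependency injection)."""

    def __init__(self, conn):
        self.conn = conn

    def add(self, name):
        self.conn.execute("INSERT INTO users (name) VALUES (?)", (name,))

    def count(self):
        return self.conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


class UserRepositoryTest(unittest.TestCase):
    def setUp(self):
        # A fresh in-memory database per test: fast, and nothing leaks between tests.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
        self.repo = UserRepository(self.conn)

    def tearDown(self):
        self.conn.close()  # the in-memory database disappears with the connection

    def test_should_add_user(self):
        self.repo.add("ann")
        self.assertEqual(self.repo.count(), 1)

    def test_should_start_each_test_with_an_empty_table(self):
        self.assertEqual(self.repo.count(), 0)
```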

That's all for now. As I learn more I'll post more entries. Happy Testing.

Wednesday, August 17, 2011

Some Points on Git vs Subversion

Aside from the obvious points about how "Subversion is centralized and Git is distributed", I thought I'd offer some other places where the two differ - particularly in practicalities. In passing conversations, it's very easy to say that Git is better than Subversion without going into much detail as to why, so I'm going to cover quite a lot here.

In Git, you create your own repositories. You don't need to ask the system administrator to do it for you on another server (unless it's to set up a central repository). You create the repo with a simple command:

$ cd proj/
$ git init

Compare this to Subversion's way:

$ ssh [svn server]
$ sudo svnadmin create /path/to/subversion/repos/proj --fs-type fsfs

The Git repository lives in the top level of your project (proj/.git). So, unlike Subversion, you just have one hidden directory for version control. No .svn directories in every single directory of your project.

Git doesn't use the term working copy like Subversion does. Git uses the term working tree. Since there's no separation of the working tree from the repository, there's no copy. Make sense? In Subversion, your repository exists over there on another server. In Git, your repository is right here, in the .git directory inside your project's directory on your workstation. This means deleting your git repo is as simple as rm -rf proj/.git.

Git also doesn't use separate file systems that you need to worry about creating or updating. With Subversion, if you create a repository with FSFS it will be a specific version of FSFS. If you upgrade svn, you'll also have to upgrade your Subversion file system to get the new features offered by the new version of the svn software. This requires asking your friendly system administrator to do an svn upgrade on your repositories. Git doesn't break backward compatibility like that.

"That sounds great and all, but doesn't it mean I will have to manage my own backups for each repository I create - rather than relying on my System Administrator to do it for me?" Yes and no. This is where Central Repositories for Git are useful. Even though Git is distributed, it doesn't mean Git can't make use of central repositories. In fact if you're working on a team, you'll probably want to have a central repository to push your changes to and allow others to then pull them. This also serves as a backup.

Git has three main states that your files can reside in: committed, modified and staged. Committed means that the data is stored in your local database; Modified means that you've changed the file but haven't committed to your database yet; and Staged means you have marked a modified file in its current version to go into your next commit snapshot.

This is conceptually similar to how Subversion works, but Git's staging area is far more powerful than how svn does things. Say you create a couple of files. Just like with svn, before you can commit any new files you need to add them:

$ git add .

That is what it means in Git terms when someone says to stage your files: you add them to the staging area so Git can be aware of them. Once the files are added, you can commit them:

$ git commit -m "initial import"

Once a file has been committed and you make further changes to it, you have to stage the file again before you commit it. Fortunately, you can use the -a option to stage and commit it at the same time. Edit the file foo, add a line of text blah to it, then run:

$ git commit -a -m "Committing blah"

Another thing that's great about Git is that its commands give you much more informative output. Subversion doesn't usually tell you very much about what's happening. For example, Git often tells you which commands you can run in order to undo an operation.

Create two new files called spam and eggs. To see what state the unadded files are in, type:

$ git status

This is akin to svn status, which shows ? next to unadded files. Git is more verbose and will say the files are untracked. So track them:

$ git add spam eggs

Now git status will say they are new files with changes ready to be committed. Instead of reverting files, you can unstage them (git reset HEAD spam eggs) if you change your mind and don't want to commit them.

Git makes it very easy and flexible to look at diffs and history. For example, to see what was last committed, both the message and the diff, type:

$ git show

One of my favorite commands is:

$ git whatchanged

It will show you a git log with every file that was changed and how it was changed (M, A, D, etc.). You can even do:

$ git whatchanged --since="2 weeks ago"

Like svn log, git log shows everything that has been committed so far, and you can take it many steps further. For instance, you can see the diffstat and full patch for every commit with:

$ git log --stat -p

To narrow this output down to just the commits that rkulla made, do:

$ git log --author=rkulla

This is much nicer than in svn, where you end up having to grep the log output. In fact, grep'ing the log messages with git can be done with:

$ git log --grep=foo

There's no need to git log | grep foo, which is nice because piping to grep loses information: it only shows you the lines that contain the exact match.

You can make aliases directly in Git. There's little need to outsource aliases to your command-shell like you need with Subversion. Since there are so many command-line options with Git, I often make custom commands. Take this one:

$ git config --global alias.lol 'log --pretty=format:"%C(yellow)%h%d%Creset %s - %an [%ar]"'

This lets me type:

$ git lol

to show the git log like you get with git log --oneline, but more verbose, with things like the author and how long ago things were committed.

You can also apply filters to git log. For example to see all files that were ever deleted from the repository:

$ git log --name-status --diff-filter=D

The pickaxe can help you find code that's been deleted or moved (or introduced) based on a string. To use the pickaxe, pass -S[string] to git log:

$ git log -Sfoo

That will show the commit(s) that added or removed the string foo (more precisely, commits that changed the number of times it occurs). Because the ncurses-based program tig accepts git log options, you can view the list of commits, and see each diff by hitting Enter, by running:

$ tig -Sfoo

With git, commands like git log, git diff, and others that produce lots of output will automatically get piped to your pager program (e.g., less(1) or more(1)). With Subversion, you always have to pipe things to less manually.
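To make the pickaxe's rule concrete, here is a simplified single-file model in Python; it is not how Git implements -S. A commit counts as a hit when it changes the number of times the string occurs, which also means that moving a line within the same file doesn't show up, because the count stays the same.

```python
def pickaxe(history, needle):
    """Return commit ids (newest first) that change how often `needle` occurs.

    `history` is a list of (commit_id, file_text) snapshots, oldest first.
    """
    hits = []
    previous = 0  # before the first commit the string occurs zero times
    for commit_id, text in history:
        count = text.count(needle)
        if count != previous:
            hits.append(commit_id)
        previous = count
    return hits[::-1]  # newest first, like git log


history = [
    ("a1", "bar"),
    ("b2", "bar\nfoo()"),                   # introduces foo
    ("c3", "bar\nfoo()  # edited comment"),  # count unchanged
    ("d4", "foo()\nbar"),                   # moved within the file: count unchanged
    ("e5", "bar"),                          # deletes foo
]
print(pickaxe(history, "foo"))  # ['e5', 'b2']
```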

You can also use git grep to search the files Git is tracking:

$ git grep 'foo'

That will show you every line, in every tracked file, that contains the string foo. All without the need to specify file names or exclusions; it even recurses into subdirectories automatically. Contrast this with what Subversion would make you go through:

$ find . -name .svn -prune -o -type f -exec grep foo {} +

(If you are using Subversion, do yourself a favor and install ack, so you don't have to write find commands like the one above.)

Git's grep command is also powerful enough so that you don't have to write regular expressions as much. Adding -p will show you what functions the matches are in:

$ git grep -np VIDEORESIZE
imgv.py=33=def main():
imgv.py:105: if event.type == VIDEORESIZE:
...

Moving on to branching differences really quick. Creating branches in Git is much easier than in Subversion. Instead of doing:

$ svn cp ^/trunk ^/branch/branchname -m "creating branch"
$ svn switch ^/branch/branchname

You can just do:

$ git branch branchname
$ git checkout branchname

That's it. Or even easier:

$ git checkout -b branchname

to create the branch and switch to it at the same time.

Deleting a branch is as easy as:

$ git branch -d branchname

(You do delete your branches when you're done with them, don't you?)

Git supports merging between branches much better than Subversion does. Git keeps track of much more history to make it a smooth operation, and the command is easier to type. Once you're on one of the branches to be merged, you can merge the other one with:

$ git merge [branch]

If there are no conflicts it even commits automatically for you, though you can tell it not to with --no-commit.

Aside from merging, sometimes you just want to grab a commit from a different branch and apply it to your current branch. This is called cherry picking and in git the command is appropriately named:

$ git cherry-pick [revision]

Compare this with Subversion, which has a much less intuitive way of doing this:

$ svn merge -c [revision] [url]
$ svn ci -m "cherry picked [rev]"

As you may have guessed by now, creating a tag with Git is as easy as creating a branch:

$ git tag -a tagname

And you can list your tags just as simply:

$ git tag -l

Another thing Git can do that Subversion can't is stashing. For those times when your changes are incomplete and you're not ready to commit, but you need to temporarily return to the last clean commit, you can push all your uncommitted changes onto a stack with git stash and bring them back later with git stash pop. See the documentation for more options.

Moving on, moving on... Okay, how about reverting? To do the equivalent of "svn revert -R ." (discard all local changes to tracked files, whether staged or not):

$ git reset --hard HEAD

Rolling back a commit is as easy in git as:

$ git revert HEAD

This creates a new commit that undoes the last one, and it even fills in the commit message for you: Revert "[your last commit message]", followed by "This reverts commit [hash]."

You can even pick specific commits to undo with:

$ git revert [hash]

Oh yes, git lets you easily change commit messages, too. Say you have a post-commit hook script that looks for the string "bug #nnnn" in commit messages in order to attach a list of the changed files to the corresponding ticket in your bug tracker. Well, what if you forget to put that special syntax in your commit message? With Git, you could just run:

$ git commit --amend

This opens your editor and lets you change the message of your most recent commit. Once you close your editor, it's done. Good luck doing that with Subversion. If you need to modify multiple commit messages, or a commit message several commits back, look into using Git's interactive rebase.

Moving onto deployments now... Git has archiving features built in. For example, you can create a tar of the latest revision using:

$ cd my-proj
$ git archive -o /tmp/my-proj.tar HEAD

You can create a compressed tarball with:

$ git archive HEAD | gzip > /tmp/my-proj.tar.gz

Say you want to zip up just the documentation of your project:

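$ git archive --format=zip --prefix=my-docs/ HEAD:docs/ > /tmp/my-docs.zip

Now when you unzip my-docs.zip it will unpack a directory called my-docs with your documentation. (The trailing slash on --prefix matters: without it, the prefix is glued onto each file name instead of becoming a directory.)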

This list of comparisons is getting really long, so I'm going to stop now. Feel free to add your own additions in the comments section below.

Sunday, August 14, 2011

Getting Rid of Cable TV

I recently realized I was paying $160.00 a month for cable TV and Internet after a promotion I had expired. My bill was $110 before that, which was really still too much. I only had up to 18 Mbps downloads and 1.5 Mbps uploads, and my cable package was rather basic and didn't include HBO, Cinemax or Showtime. If you've been wondering whether it's worth ditching cable or satellite TV, read on!

I decided to ditch cable TV altogether and just keep Internet (up to 18 Mbps downloads for $53.00 a month). My provider, AT&T UVerse, didn't have any other promotions at the time that sounded any good. The best they could do to lower my bill was drop me down to 70 channels (Family Plan) for $82 a month plus taxes and surcharges (which end up being $15-20 in California), so I'd be right back at ~$100 a month.

In the living room I already have an Xbox 360 (which is capable of Netflix streaming. EDIT: the Xbox 360 is also capable of Hulu Plus!), a stand-alone Sony Blu-ray player and a Boxee Box. The Boxee Box is great because it streams any downloaded file such as .avi, .mkv, .mp3, etc., can stream Netflix, and comes with free streaming channels like South Park, YouTube, NASA TV, tech podcasts, HGTV, news channels, and much more. What's also great about the Boxee Box is that it never has a problem finding my network. I just right-click a folder on any of my computers (be they Linux, Windows or Mac), share the folder, and bam, it shows up in the Boxee Box instantly.

For the bedroom TV, since I would only have had a DVD player after getting rid of cable TV, I decided to get a "Roku 2 XS" player (capable of Netflix, Hulu, Amazon Prime, games like Angry Birds, etc.). It was only ~$90, and I signed up for Hulu Plus. Note that Roku also makes $59 models that are almost as good.

Even if all that ends up not being enough content and I decide to reactivate my Netflix account, it will still only be $53 (internet) + $8 (Hulu Plus) + $8 (Netflix) = $69 a month. But since I don't have any plans to ever go back to Netflix, I expect my monthly internet/"pseudo TV" bill to be only about $61.00.

So I'm saving $90-100 a month by getting rid of cable TV. The Roku will have paid for itself in the first month alone. Plus, with all those savings I can upgrade to Spotify Premium and maybe get a Squeezebox and still be saving money, and I'm way better off than I ever was with a cable package full of channels I never watched. With a modern internet connection, neither the Roku nor the Boxee has had any bothersome "buffering" problems yet, even with three devices streaming at once.

One other thing to consider is that when you give up cable, you have to return your cable boxes (set-top boxes). Since those usually come with the universal remote you've been using, you'll want to buy a good universal remote of your own, which you can get at almost any department or electronics store. I recommend at least a Logitech Harmony 650, which can control at least 5 devices and lets you set up macros that automatically turn on your TV and other device(s) and switch to the appropriate HDMI input. Believe it or not, the Harmony line should even partially control your Xbox 360, Boxee Box, etc. So expect to spend at least $50-100 on a good universal remote.

For any other content I might be missing, there's always iTunes (I'm always getting gift cards), Redbox, video streaming apps for iPhone/iPad, and so on. For the HBO and Showtime shows I love, I mostly just wait until the season is over so I can watch all the episodes at once and without commercials.